We make AI work

From MLOps to LLMOps and AgentOps to AI platforms

The path from a promising machine learning model to a stable, scalable AI solution is complex: data changes, models become outdated, and new requirements arise. At the same time, expectations for speed, traceability, and security are rising.

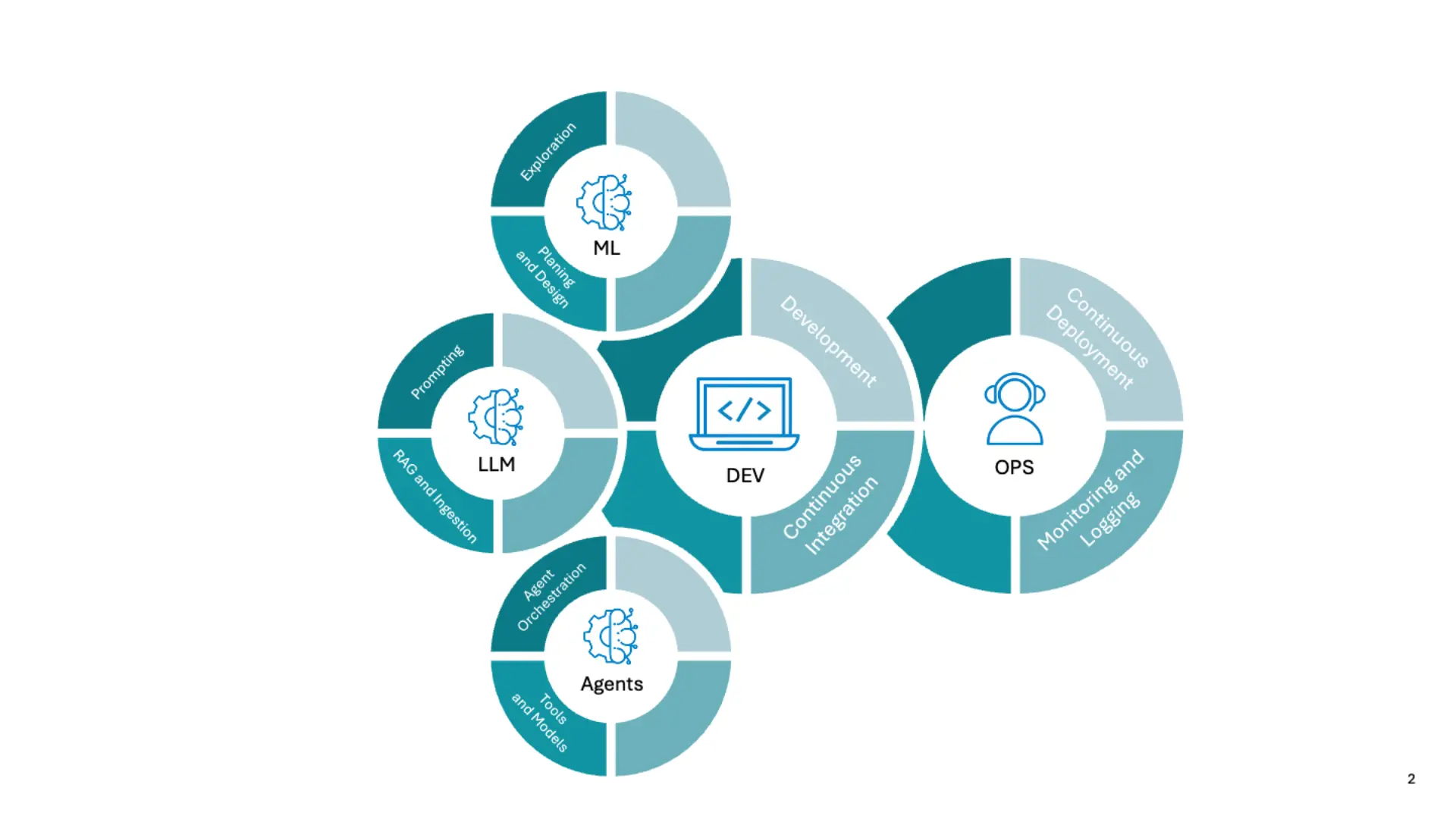

MLOps, LLMOps and AgentOps create the foundation for reproducible development, controlled deployment and continuous model lifecycle management.

We develop operating models, platforms, and processes that support AI end to end, from exploration and deployment to monitoring in production. This makes ML models, LLM solutions, and agent systems reliable, secure, and economical to operate.

"AI without MLOps, LLMOps and AgentOps is like a Formula 1 car without a pit crew: impressive on paper, but not roadworthy in reality."

From exploration to operation: How production-ready and reliable AI is created

Dimensions for scalable AI systems

Everything starts with a structured analysis of the use case: What data is available? Which KPIs need to be met? What risks exist? In this phase, we establish clarity about the expected value, technical feasibility, and economic viability.

We evaluate model and architecture options, define success criteria, and lay the foundations for the later operating model. This keeps proofs of concept (PoCs) from ending in dead ends or never reaching production readiness.

A stable MLOps ecosystem requires clear roles and responsibilities. Together, we define roles such as Model Owner, Data Steward, and the various engineering functions, as well as deployment standards and governance mechanisms.

Through a consistent process landscape (from development through review to approval), we create transparency and security. This ensures that models not only work technically but are also embedded in the organization and remain auditable.

In the development phase, models, pipelines, and feature stores are created. We rely on reproducible workflows, version control, structured test procedures, and metadata tracking — so that experiments remain traceable and models can be efficiently reused.

We integrate modern frameworks and ensure that models are built, documented, and evaluated consistently. Predictability replaces luck.

Continuous integration ensures that code, data pipelines, features, tests, and models work together as a single unit. We establish standards for automated tests (data quality, model behavior, contracts) and ensure that every change is validated safely.

The goal is a predictable and reliable development ecosystem where quality is never a coincidence.
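As an illustration of the automated data-quality tests mentioned above, the following minimal Python sketch checks a batch of records against a simple column contract (type, allowed null rate, value range). The `ColumnRule` and `validate_batch` names and the example columns are hypothetical; in a real pipeline, such checks would typically run inside the CI test suite or be delegated to a dedicated validation library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ColumnRule:
    """Hypothetical data contract for one column of a training or scoring batch."""
    name: str
    dtype: type
    max_null_rate: float = 0.0
    min_value: Optional[float] = None
    max_value: Optional[float] = None


def validate_batch(rows: list, rules: list) -> list:
    """Check records against column rules; an empty result means the batch passes."""
    violations = []
    for rule in rules:
        values = [row.get(rule.name) for row in rows]
        present = [v for v in values if v is not None]
        null_rate = 1 - len(present) / len(values) if values else 0.0
        if null_rate > rule.max_null_rate:
            violations.append(f"{rule.name}: null rate {null_rate:.2f} exceeds {rule.max_null_rate}")
        if any(not isinstance(v, rule.dtype) for v in present):
            violations.append(f"{rule.name}: values not of type {rule.dtype.__name__}")
            continue  # range checks are meaningless on mistyped values
        if rule.min_value is not None and any(v < rule.min_value for v in present):
            violations.append(f"{rule.name}: value below {rule.min_value}")
        if rule.max_value is not None and any(v > rule.max_value for v in present):
            violations.append(f"{rule.name}: value above {rule.max_value}")
    return violations


# Illustrative contract; column names and limits are assumptions.
RULES = [
    ColumnRule("age", int, min_value=0, max_value=120),
    ColumnRule("income", float, max_null_rate=0.1),
]

if __name__ == "__main__":
    batch = [{"age": 34, "income": 52000.0}, {"age": -3, "income": None}]
    for problem in validate_batch(batch, RULES):
        print(problem)
```

A CI job can fail the build whenever `validate_batch` returns a non-empty list, so that a broken upstream data source is caught before a model is retrained on it.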

Modern AI requires a stable delivery process: versioning, rollback mechanisms, canary deployments, approval processes, and a secure model registry.

We build deployment pipelines that securely integrate ML models, LLM applications, and agents into production systems, whether on-premises, in the cloud, or at the edge. Automation reduces risk, cost, and time to production.
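The canary principle can be sketched in a few lines of Python: a small, deterministic share of requests goes to the candidate model version, and the candidate is promoted or rolled back based on its observed error rate. The `CanaryRelease` class, the version labels, and all thresholds are illustrative assumptions, not the API of a specific product.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class CanaryRelease:
    """Sketch of a canary rollout between a stable and a candidate model version."""
    stable: str
    candidate: str
    canary_share: float = 0.1     # assumed share of traffic routed to the candidate
    max_error_rate: float = 0.05  # assumed rollback threshold for the candidate
    min_requests: int = 100       # minimum evidence before any decision
    stats: dict = field(default_factory=dict)  # version -> (requests, errors)

    def route(self, request_id: str) -> str:
        """Deterministically map a request ID to one of 10,000 buckets and pick a version."""
        bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest(), 16) % 10_000
        return self.candidate if bucket < self.canary_share * 10_000 else self.stable

    def record(self, version: str, ok: bool) -> None:
        """Count one served request and whether it failed."""
        requests, errors = self.stats.get(version, (0, 0))
        self.stats[version] = (requests + 1, errors + (0 if ok else 1))

    def decide(self) -> str:
        """Return 'wait', 'rollback', or 'promote' based on the candidate's error rate."""
        requests, errors = self.stats.get(self.candidate, (0, 0))
        if requests < self.min_requests:
            return "wait"
        return "rollback" if errors / requests > self.max_error_rate else "promote"


if __name__ == "__main__":
    # Hypothetical version labels for illustration only.
    release = CanaryRelease(stable="churn-model:v1", candidate="churn-model:v2")
    print(release.route("request-42"), release.decide())
```

Hashing a stable identifier, rather than drawing a random number per call, keeps the same ID routed to the same version and makes canary results reproducible; hashing a user or session ID instead would pin whole users to one version.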

After deployment, an important phase begins: monitoring. We track model quality, data changes, performance, failures, latency, costs, bias, and explainability signals.

With drift detection and automated retraining pipelines, models remain stable and adaptable. Operational excellence means: AI behaves reliably, traceably, and in a controlled manner — even in continuous operation.
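One widely used drift metric is the Population Stability Index (PSI), which compares the distribution of a feature in production with its distribution in the training data. The sketch below uses equal-width bins over the reference range; the bin count, the smoothing constant `eps`, the `needs_retraining` helper, and its threshold of 0.2 are assumptions. Values above roughly 0.2 to 0.25 are often read as significant drift, but the right threshold depends on the feature and the use case.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI = sum over bins of (cur_i - ref_i) * ln(cur_i / ref_i), using bin shares."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant feature

    def shares(sample):
        counts = [0] * bins
        for x in sample:
            # Values outside the reference range are clamped into the first or last bin.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(sample), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def needs_retraining(reference, current, threshold=0.2):
    """Hypothetical trigger: flag a feature when its PSI exceeds the threshold."""
    return population_stability_index(reference, current) > threshold


if __name__ == "__main__":
    training = [float(i % 100) for i in range(1000)]
    production = [x + 20 for x in training]  # simulated shift in the feature
    print(population_stability_index(training, production), needs_retraining(training, production))
```

In an automated setup, such a check would typically run per feature on a schedule, and a positive result would start the retraining pipeline rather than retrain the model inline.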

Agent-based and LLM-based systems require their own operating models. In addition to classical ML artifacts, their roles, rules, tools, prompt profiles, coordination logic, and action sequences must also be versioned and monitored.
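A simple way to make such artifacts versionable is to derive a version ID from a canonical serialization of the complete agent configuration, so that any change to a prompt, rule, or tool list yields a new version. The configuration keys, the `register` helper, and the in-memory `REGISTRY` below are hypothetical; a production setup would store these snapshots in a model registry or artifact store.

```python
import copy
import hashlib
import json

# Hypothetical in-memory registry: version ID -> frozen configuration snapshot.
REGISTRY: dict = {}


def agent_config_version(config: dict) -> str:
    """Derive a deterministic version ID from an agent's full configuration."""
    # sort_keys makes the ID independent of key order; compact separators avoid whitespace noise.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def register(config: dict) -> str:
    """Store a deep copy of the configuration under its version ID and return the ID."""
    version = agent_config_version(config)
    REGISTRY.setdefault(version, copy.deepcopy(config))
    return version


# Example configuration; all keys and values are illustrative.
support_agent = {
    "role": "customer-support",
    "rules": ["never share personal data", "escalate refunds above 500 EUR"],
    "tools": ["search_kb", "create_ticket"],
    "prompt_profile": {"system": "You are a helpful support agent.", "temperature": 0.2},
    "coordination": {"max_steps": 8, "handoff_to": "billing-agent"},
}

if __name__ == "__main__":
    print(register(support_agent))
```

Because the snapshot is stored under its ID, every logged agent run can reference the exact configuration it used, which also makes rolling back to a previous prompt or tool set straightforward.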

We establish dedicated AgentOps processes: simulation tests, action logs, tool-usage monitoring, cost tracking, hallucination detection, and validation mechanisms.

This combines the agents’ autonomy with safety, governance, and auditability.
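Action logs and cost tracking can start as simply as an append-only record of every tool call an agent makes, aggregated per tool and checked against a budget. In the sketch below, the `ActionLog` class, the token-based price, and the budget are hypothetical placeholders rather than real provider rates; a production system would usually send these records to an observability backend instead of keeping them in memory.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ActionLog:
    """Append-only log of agent actions with per-tool usage and cost totals."""
    price_per_1k_tokens: float = 0.002  # hypothetical blended price, not a real rate
    budget: float = 1.0                 # hypothetical spend limit per session
    entries: list = field(default_factory=list)

    def record(self, agent: str, tool: str, tokens: int, ok: bool) -> None:
        """Append one tool call with its estimated cost."""
        cost = tokens / 1000 * self.price_per_1k_tokens
        self.entries.append({"ts": time.time(), "agent": agent, "tool": tool,
                             "tokens": tokens, "ok": ok, "cost": cost})

    def usage_by_tool(self) -> dict:
        """Aggregate calls, failures, and cost per tool."""
        summary = defaultdict(lambda: {"calls": 0, "failures": 0, "cost": 0.0})
        for entry in self.entries:
            stats = summary[entry["tool"]]
            stats["calls"] += 1
            stats["failures"] += 0 if entry["ok"] else 1
            stats["cost"] += entry["cost"]
        return dict(summary)

    def total_cost(self) -> float:
        return sum(entry["cost"] for entry in self.entries)

    def over_budget(self) -> bool:
        return self.total_cost() > self.budget


if __name__ == "__main__":
    log = ActionLog()
    log.record("planner", "web_search", tokens=1500, ok=True)
    log.record("writer", "sql_query", tokens=1000, ok=False)
    print(log.usage_by_tool(), log.over_budget())
```

Aggregating failures per tool makes it easy to spot a misbehaving integration, while the budget check gives a hook for stopping an agent before costs run away.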

High-performing AI requires a platform that connects data, models, tools, security mechanisms, and operational processes: a true AI ecosystem. We design platform architectures based on hyperscaler platforms, open-source technologies, or hybrid approaches that enable end-to-end processes.

These platforms become the central place where models are developed, deployed, and monitored. They enable reuse, standardization, and long-term scalability.

Our Service Offering

- Implementation of MLOps, LLMOps and AgentOps processes

- Development of reproducible ML and LLM pipelines

- Setup of CI/CD for models, pipelines, LLM workflows, and agents

- Integration of model registries, feature stores, monitoring & observability

- Architecture & implementation of AI platforms (Azure, Databricks, Kubernetes)

- Governance, role models & operating models for AI organizations

Results & Added Value

- Faster, more secure, and more controlled rollout of AI solutions

- Lower costs through automation

- Lifecycle management for models, LLMs, and agents

- Higher quality and stability in production environments

- Future-proof AI ecosystems that grow with the business