SQL Optimization in Just a Few Steps

Many performance problems can be solved with a few small tweaks. The tips below show how to optimize queries effectively.

7 tips for faster queries in distributed databases

Inefficient queries as a real performance killer

Many performance issues arise not from sluggish hardware, but from inefficient SQL queries. These inefficiencies often go unnoticed as long as the datasets are small. As the data grows over time, so do the query execution times. The optimal solution can only be identified case by case, but the following suggestions can improve SQL performance considerably.

Basic optimizations

1. Not enough filter criteria:

Often, only a particular range of data from the joined tables is required. Filter conditions that narrow the data down to specific values reduce the amount of data that has to be processed.

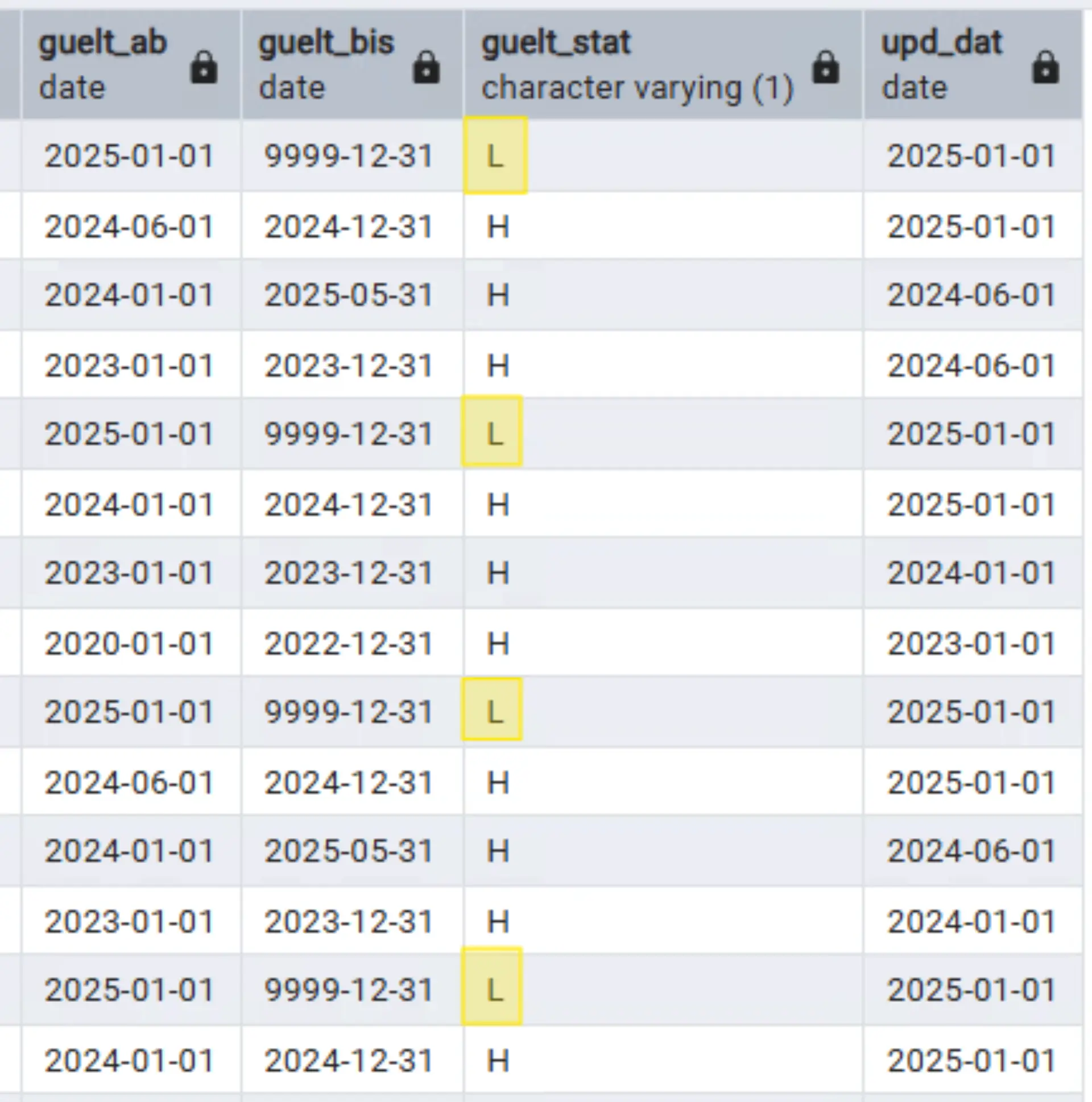

For instance, historicized tables can typically be restricted to the most recent valid data (as shown in the image, the rows with guelt_stat = L). This can significantly decrease the number of rows, as a vast amount of data accumulates over the years.
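The effect of such a filter can be sketched with a small, self-contained example. It uses Python's built-in sqlite3 module as a stand-in for the real database. The tables customer and contract_hist, their columns, and the status value 'H' for historical rows are assumptions made up for illustration; only guelt_stat = 'L' is taken from the text above.

```python
import sqlite3

# In-memory stand-in database; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (cust_id INTEGER, name TEXT);
    CREATE TABLE contract_hist (cust_id INTEGER, tariff TEXT, guelt_stat TEXT);
    INSERT INTO customer VALUES (1, 'Alice'), (2, 'Bob');
    -- 'L' marks the currently valid row; 'H' (assumed) marks historical rows.
    INSERT INTO contract_hist VALUES
        (1, 'basic',   'H'),
        (1, 'plus',    'H'),
        (1, 'premium', 'L'),
        (2, 'basic',   'H'),
        (2, 'plus',    'L');
""")

# Without a filter, every historical version is joined and processed.
unfiltered = conn.execute("""
    SELECT c.name, h.tariff
    FROM customer c
    JOIN contract_hist h ON h.cust_id = c.cust_id
""").fetchall()

# With the filter, only the currently valid row per customer remains.
filtered = conn.execute("""
    SELECT c.name, h.tariff
    FROM customer c
    JOIN contract_hist h ON h.cust_id = c.cust_id
    WHERE h.guelt_stat = 'L'
    ORDER BY c.name
""").fetchall()

print(len(unfiltered), "rows without filter,", len(filtered), "rows with filter")
```

In this toy data the filter cuts the joined rows from five to two. On a table with years of history, the ratio is usually far larger.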

2. Inadequate join condition:

When the join condition matches multiple rows on both sides (a many-to-many relationship), every matching row on one side is combined with every matching row on the other. For each join value, the result is a Cartesian product (cross join) of the matching rows. Cross joins are seldom intended or necessary and should be avoided whenever possible, because even moderate data volumes can create a substantial load and increase query time. To prevent this, join on fields that are unique on at least one side. For the best performance, the join fields should also have the same data type.

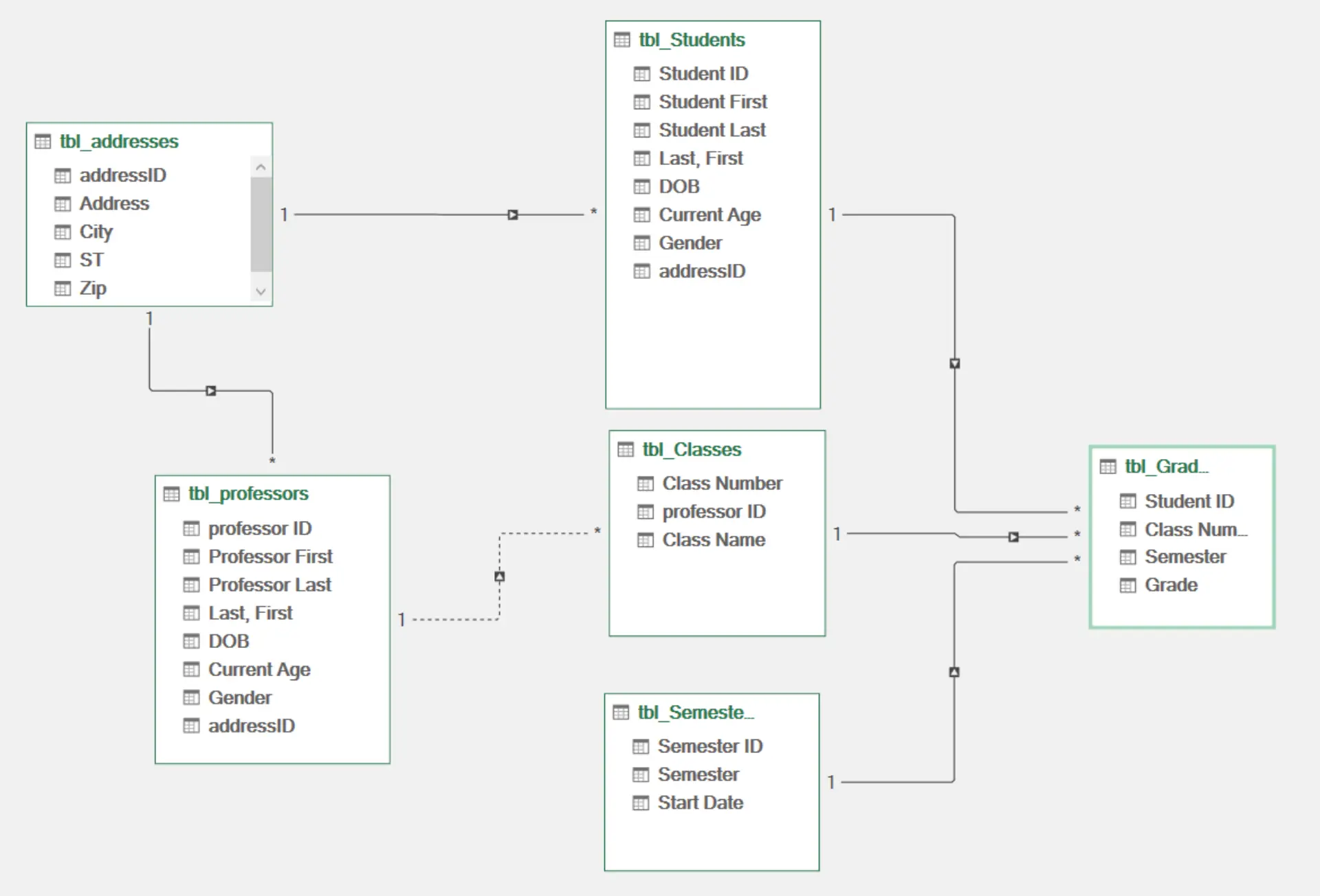
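How quickly a non-unique join condition multiplies rows can be shown with a minimal sketch, again using sqlite3 as a stand-in. The tables orders and agents and all of their columns are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical tables: agent_id is unique in agents, region is not.
    CREATE TABLE agents (agent_id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (order_id INTEGER, agent_id INTEGER, region TEXT);
    INSERT INTO agents VALUES (10, 'north'), (11, 'north'), (12, 'north');
    INSERT INTO orders VALUES (1, 10, 'north'), (2, 11, 'north'), (3, 10, 'north');
""")

# Join on the non-unique column: every order matches every agent in the region.
rows_by_region = conn.execute("""
    SELECT COUNT(*) FROM orders o JOIN agents a ON a.region = o.region
""").fetchone()[0]

# Join on the unique key: every order matches exactly one agent.
rows_by_key = conn.execute("""
    SELECT COUNT(*) FROM orders o JOIN agents a ON a.agent_id = o.agent_id
""").fetchone()[0]

print(rows_by_region, "rows via region,", rows_by_key, "rows via agent_id")
```

Three orders and three agents already yield nine rows when joined on region. The growth is multiplicative per join value, which is why it stays invisible on small test data.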

3. Bad data model:

Optimized SQL queries won't provide substantial benefits if the data model isn't carefully designed or prepared for future needs. Excessive or insufficient normalization can cause performance issues. Data types should be chosen carefully from the start. Often, these data models are dictated by the source systems and cannot be changed. In this case, the data should be gradually adjusted to fit a high-performance data model. Typically, there are data engineers within the organization who can assist with this optimization.

Specific optimization for distributed databases using Greenplum as an example

1. Perform table analysis:

The optimizer depends on up-to-date knowledge of the data structures and statistics, for instance the most common column values and an estimate of the data distribution. In Greenplum, these statistics are collected with ANALYZE. They help the query planner choose the most effective execution plan. In some situations, however, an explicit analysis should not be run (e.g., between directly consecutive inserts, updates, or deletes).

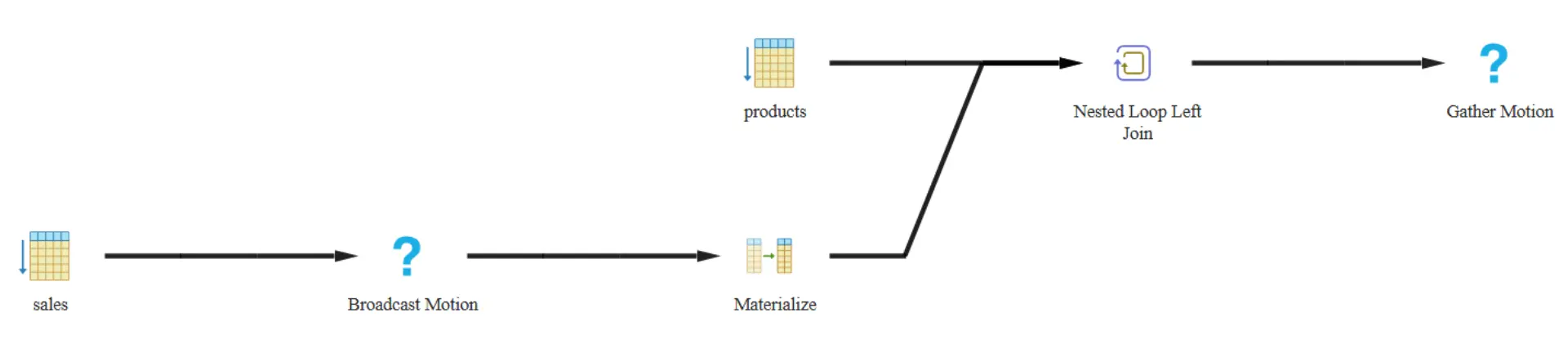
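What a statistics run produces can be illustrated with SQLite, which also offers an ANALYZE command. This is only a stand-in sketch: the table events and the index idx_events_status are hypothetical, and Greenplum stores its statistics in its own catalog rather than in the sqlite_stat1 table used here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_id INTEGER, status TEXT)")
conn.execute("CREATE INDEX idx_events_status ON events (status)")

# 1000 rows, one in ten with status 'L' (hypothetical data).
rows = [(i, "L" if i % 10 == 0 else "H") for i in range(1000)]
conn.executemany("INSERT INTO events VALUES (?, ?)", rows)

# Collect statistics after the bulk load, not between individual inserts.
conn.execute("ANALYZE events")

# SQLite keeps the collected statistics in the sqlite_stat1 table.
stats = conn.execute("SELECT tbl, idx, stat FROM sqlite_stat1").fetchall()
print(stats)
```

The point of the sketch is the order of operations: load the data first, then analyze once, so that the planner sees statistics that match the final state of the table.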

2. Avoid sub-selects:

The result set generated by a sub-select may be distributed to all segment servers through a broadcast. Depending on its size, this can cost considerable performance. Instead, materialize the sub-select in a temporary table beforehand and join that table in the actual select.
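The rewrite from a sub-select to a temporary table can be sketched as follows. The example runs on sqlite3 as a stand-in, so it shows only the shape of the rewrite and that both variants return the same rows, not the broadcast behavior itself. The tables sales and customer and the name tmp_retail are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (cust_id INTEGER PRIMARY KEY, segment TEXT);
    CREATE TABLE sales (cust_id INTEGER, amount INTEGER);
    INSERT INTO customer VALUES (1, 'retail'), (2, 'corporate'), (3, 'retail');
    INSERT INTO sales VALUES (1, 100), (2, 250), (3, 75), (1, 40);
""")

# Variant 1: sub-select inside the actual query.
with_subselect = conn.execute("""
    SELECT s.cust_id, s.amount
    FROM sales s
    WHERE s.cust_id IN (SELECT cust_id FROM customer WHERE segment = 'retail')
    ORDER BY s.cust_id, s.amount
""").fetchall()

# Variant 2: materialize the sub-select first, then join the temporary table.
conn.execute("""
    CREATE TEMP TABLE tmp_retail AS
    SELECT cust_id FROM customer WHERE segment = 'retail'
""")
with_temp_table = conn.execute("""
    SELECT s.cust_id, s.amount
    FROM sales s
    JOIN tmp_retail t ON t.cust_id = s.cust_id
    ORDER BY s.cust_id, s.amount
""").fetchall()

print(with_subselect == with_temp_table, with_temp_table)
```

The join variant is equivalent here only because cust_id is unique in the temporary table. In Greenplum, the temporary table could additionally be given a DISTRIBUTED BY clause on the join column and be analyzed before the join; whether that pays off depends on the data volume.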

3. Optimize distribution:

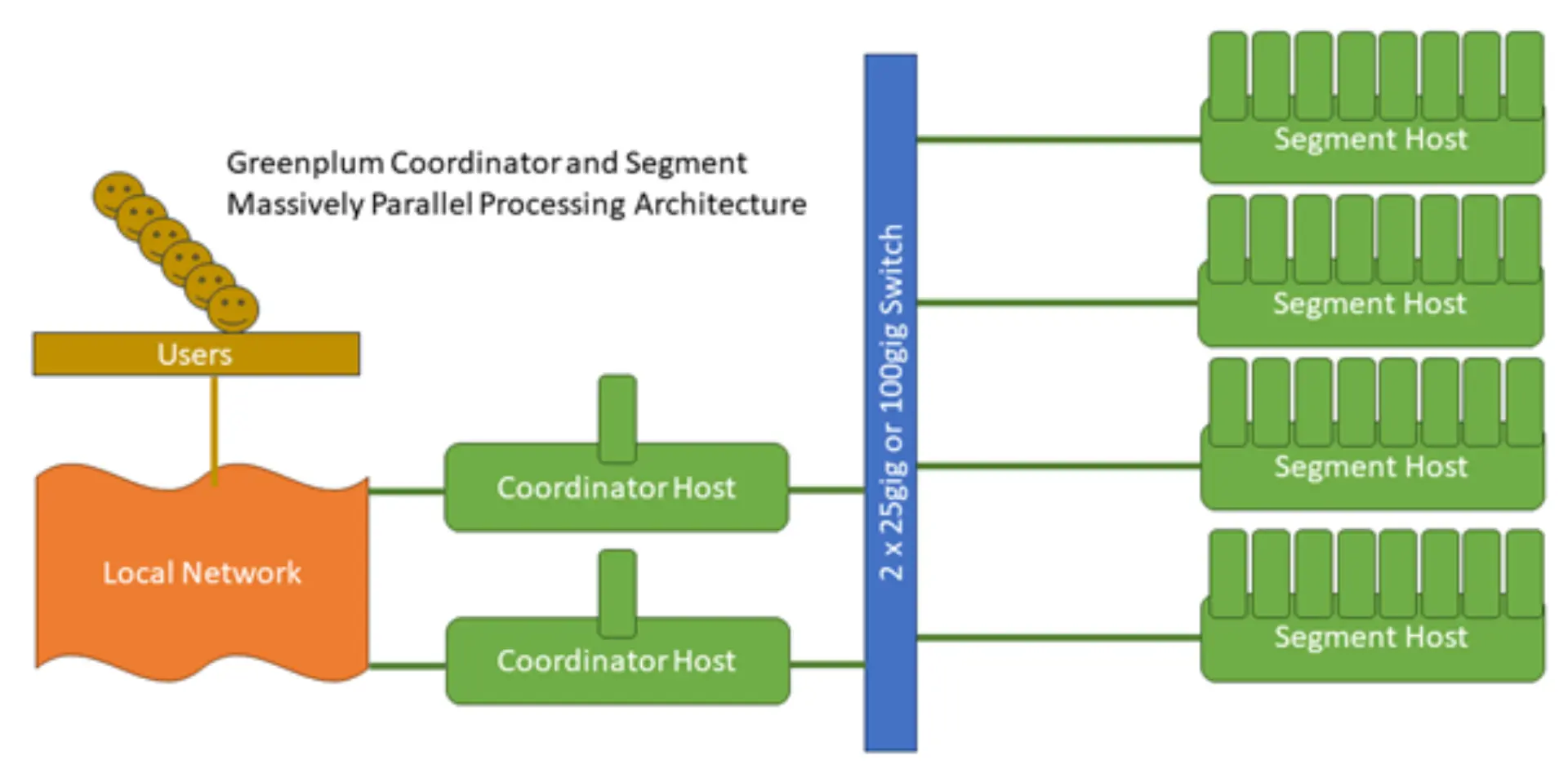

The distribution key determines how rows are spread across the segment servers of the Greenplum database. It is declared at the end of the table definition with the clause DISTRIBUTED BY (<column>). The number of distinct values in this column should be many times the number of segment servers; otherwise, the data is distributed unevenly and query times increase. Individual values that occur very often can also disturb the uniform distribution, particularly a high number of NULLs, because all rows with the same key value are stored on the same segment.

Source: https://techdocs.broadcom.com/us/en/vmware-tanzu/data-solutions/tanzu-greenplum/7/greenplum-database/admin_guide-intro-arch_overview.html, 08.10.2025

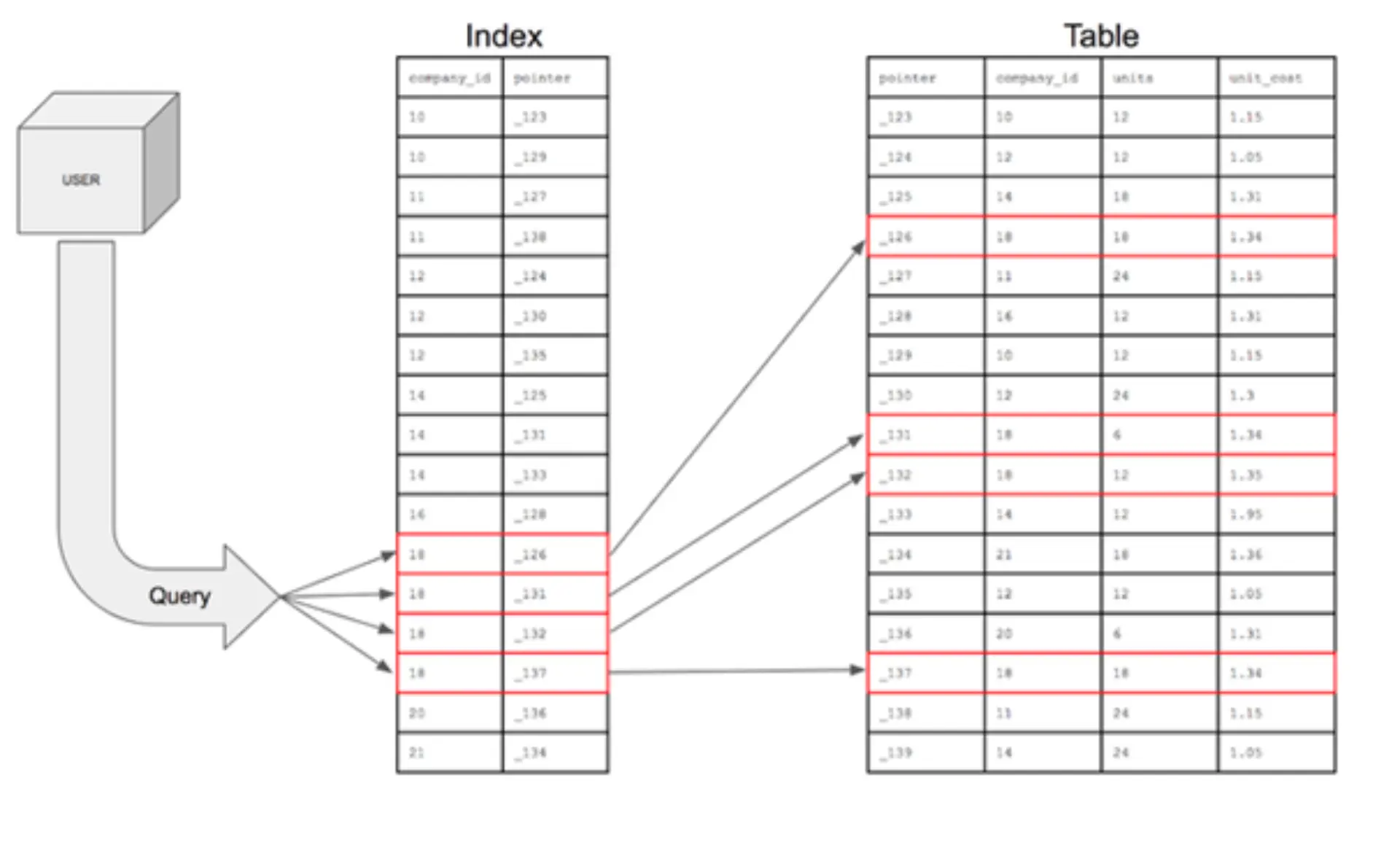
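The influence of the distribution key on data skew can be simulated in a few lines of plain Python. This is a rough sketch under stated assumptions: the segment count of 4 is made up, and zlib.crc32 is only a stand-in for Greenplum's internal hash function.

```python
import zlib
from collections import Counter

N_SEGMENTS = 4  # hypothetical number of segment servers


def segment_for(key):
    """Map a key to a segment; crc32 is a stand-in for the real hash function."""
    return zlib.crc32(repr(key).encode()) % N_SEGMENTS


def rows_per_segment(keys):
    """Count how many rows each segment would receive for the given key values."""
    counts = Counter(segment_for(k) for k in keys)
    return [counts.get(seg, 0) for seg in range(N_SEGMENTS)]


# A key with many distinct values spreads the rows over all segments.
unique_keys = list(range(10_000))

# A key that is NULL in 90% of the rows sends all of those rows to one segment.
mostly_null = [None] * 9_000 + list(range(1_000))

even = rows_per_segment(unique_keys)
skewed = rows_per_segment(mostly_null)
print("unique key: ", even)
print("mostly NULL:", skewed)
```

On a real Greenplum table, the same check can be made by counting rows per gp_segment_id, for example with SELECT gp_segment_id, count(*) FROM <table> GROUP BY 1.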

4. Effective indexing:

In conventional databases, indexes can greatly speed up data retrieval. In a database that is spread across multiple segments, like Greenplum, indexes should be used judiciously.

Greenplum can execute rapid sequential scans, which is why BI applications that read vast datasets often perform better without indexes. With frequent write operations, indexes also add overhead, because every index has to be maintained on each write.

However, there are cases where an index also improves query performance in Greenplum, for example highly selective queries that return a single row or a small subset of the data.

Source: https://www.atlassian.com/data/databases/how-does-indexing-work, 08.10.2025
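The general trade-off between a sequential scan and an index lookup can be made visible with a query plan. The sketch below uses sqlite3 and its EXPLAIN QUERY PLAN statement as a stand-in; the table events and the index idx_events_id are hypothetical, and Greenplum's planner may decide differently for the same query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_id INTEGER, payload TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [(i, f"row {i}") for i in range(10_000)],
)


def plan(sql):
    """Return the planner's description of how it would execute the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the detail text


# A highly selective lookup that returns a single row.
lookup = "SELECT payload FROM events WHERE event_id = 4242"

plan_before = plan(lookup)  # no index yet: the whole table is scanned
conn.execute("CREATE INDEX idx_events_id ON events (event_id)")
plan_after = plan(lookup)   # with the index: a targeted search

print("before:", plan_before)
print("after: ", plan_after)
```

Comparing the plan before and after creating an index is a cheap way to check whether the index is used at all. If it is not, it only adds maintenance cost on writes.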

Conclusion

There are many ways to boost query performance. When addressing a specific situation, however, it is crucial to consider the database conditions and the data model. Depending on the configuration, an intended optimization may prove ineffective. It is therefore vital to consult the database administrator and data engineers to determine the best strategy for your organization, area, or application.

Do you have any questions?

Marcel Dittmann

IT Consultant