Need realistic test data yesterday?

How we scaled to 900 GB in 2 hours using Databricks

Making data generation simple and reproducible

In the cloud data era, access to production-like datasets for testing remains a major challenge, as it is often restricted by stringent security and privacy requirements. Reliable artificial datasets are therefore critical for evaluating how cloud data platforms perform under real-world conditions. At msg, we use Spark on Databricks to generate high-quality synthetic datasets that support data-driven decisions tailored to customer-specific needs.

Our objective was to design a repeatable data generation process in which the same configuration always produces identical results, ensuring consistent testing. A well-defined data model is the foundation of this process: it specifies the required tables and their relationships, so that the generated data stays consistent and keeps its referential integrity. With Databricks, we set up a Spark environment with minimal configuration, giving us a reliable platform on which to run this process again and again.

Current challenges of data generation

Modern big data tools are built to handle vast amounts of data, reflecting the growing scale of production systems. For evaluations to be meaningful for real production systems, artificial datasets must closely resemble production data; only then do benchmarks of technologies and processing engines yield insights that transfer to real-world scenarios.

Referential integrity is particularly challenging to achieve in big data environments because of the dependencies between tables. A table that references another can only be completed once the referenced keys exist, so not all generation steps can run in parallel. This limits how far the workload can be distributed, a constraint that becomes more noticeable at scale.

To thoroughly assess compatibility and compression, datasets must also be generated in multiple formats and with diverse data types that resemble real-world data. Testing performance across formats additionally shows how efficiently processing engines work under varied conditions.
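To make the dependency constraint concrete, here is a minimal sketch that groups tables into "waves": every table in a wave only references tables from earlier waves, so the tables within one wave can be generated in parallel while the waves themselves run in sequence. The object `GenerationOrder` and the dependency edges used with it are hypothetical illustrations, not taken from the data model in this article.

```scala
// Minimal sketch: group tables into waves that can be generated in parallel.
// The table names and edges passed in are HYPOTHETICAL examples; this is an
// illustration of the dependency problem, not the original pipeline code.
object GenerationOrder {

  /** Maps each table to the tables it references via foreign keys. */
  type Dependencies = Map[String, Set[String]]

  /** Returns waves of tables; each table depends only on tables in earlier
    * waves. Fails with IllegalArgumentException on cyclic dependencies.
    */
  def waves(deps: Dependencies): List[List[String]] = {
    // Referenced tables that have no entry of their own get an empty set.
    val all: Dependencies =
      deps ++ deps.values.flatten.filterNot(deps.contains).map(_ -> Set.empty[String])

    @annotation.tailrec
    def loop(remaining: Dependencies, done: Set[String], acc: List[List[String]]): List[List[String]] =
      if (remaining.isEmpty) acc.reverse
      else {
        // Tables whose referenced tables have all been generated already.
        val ready = remaining.filter { case (_, refs) => refs.subsetOf(done) }.keys.toList.sorted
        require(ready.nonEmpty, s"cyclic dependencies among: ${remaining.keys.mkString(", ")}")
        loop(remaining -- ready, done ++ ready, ready :: acc)
      }

    loop(all, Set.empty, Nil)
  }
}
```

With hypothetical edges such as `Customers -> Countries`, `Providers -> Countries`, `Orders -> Customers` and `Services -> Providers, Orders`, the result is four waves, `Countries`, then `Customers` and `Providers`, then `Orders`, then `Services`: only the second wave offers any table-level parallelism, which is exactly the bottleneck described above.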

Why Databricks?

Databricks, with its managed Spark service, provided an ideal platform for artificial data generation thanks to the following advantages:

- Managed Service: Databricks simplifies the setup and management of Spark, enabling us to deploy and configure the environment quickly without administrative overhead.

- Low-Level Coding Capabilities: Spark allows direct access to low-level APIs like RDDs, enabling precise control over data processing workflows and making it suitable for complex data generation tasks.

- Horizontal Scalability: Spark’s distributed computing capabilities, combined with Databricks’ dynamic cluster management, allowed us to scale horizontally and handle large datasets efficiently.

- Efficient Custom Code: With Scala, a JVM-based language, we implemented high-performance UDFs that enhanced the efficiency and flexibility of the data generation process.

- Cloud Agnostic: Databricks is available on all major cloud providers (AWS, Azure and Google Cloud), which keeps the solution portable across clouds and reduces vendor lock-in.

- Cost Efficiency: Databricks’ consumption-based pricing ensured that we only paid for the resources used during data generation, while job clusters optimized costs by scaling resources on demand.

By leveraging Databricks and Spark, we established a robust, scalable, and cost-efficient solution that meets the high demands of artificial data generation for benchmarking and testing.

Sample target data model

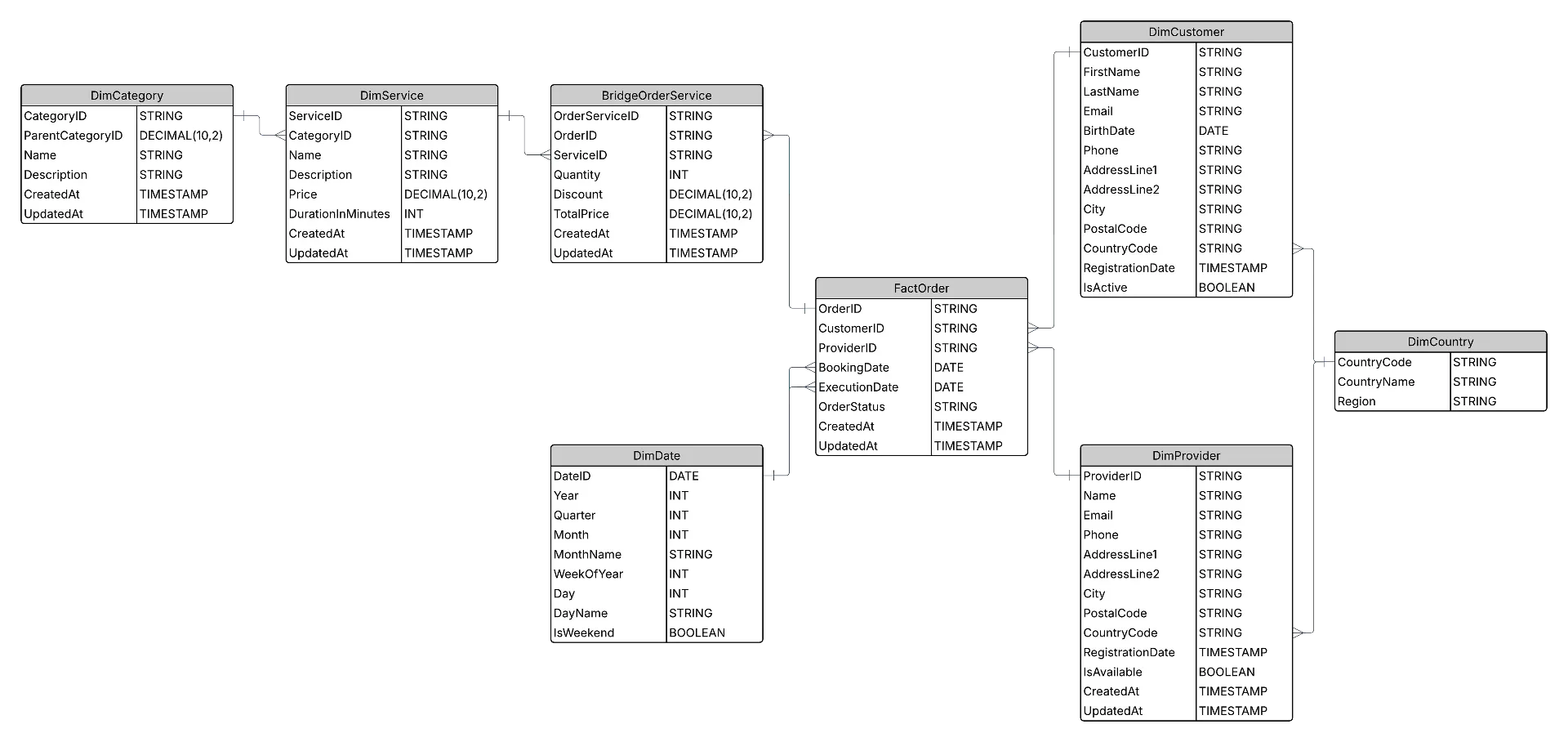

The following graphic illustrates the data model, showing the relationships between the key tables, such as Countries, Customers, Providers, Orders, Services, and more.

Data model used for the synthetic data generation

The specific data model is not critical, since any model with multiple relationships would serve this purpose. We chose this example because it covers various data types, UUIDs among them, and keeps the code and approach easy to follow.

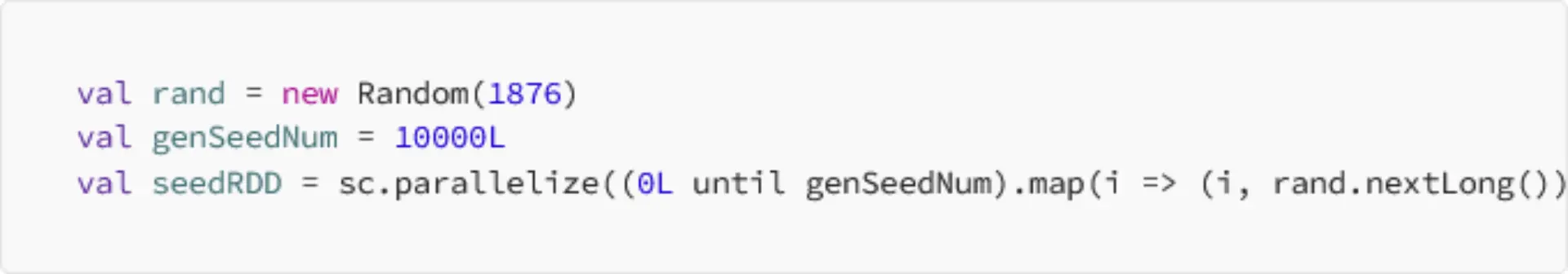

Data generation via Spark’s RDDs

Spark’s abstraction of Resilient Distributed Datasets (RDDs) enables efficient data processing distributed across multiple nodes, a capability we leveraged to build a high-performance data generation process. To make the dataset reproducible and deterministic, we initialized an instance of the Random class with a predefined seed. This seeded generator produced the seed values for an RDD, the seedRDD, so that batches of data can be generated in parallel, each from its own seed, while the batches stay distinct and the overall result stays identical across runs.
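A minimal sketch of how such a seed plan might look, assuming one `Long` seed per batch. The object `SeedPlan` and its parameters are hypothetical; in Spark, the resulting sequence could be distributed with `spark.sparkContext.parallelize(seeds, numSlices)` to obtain the seedRDD.

```scala
import scala.util.Random

// Minimal sketch (HYPOTHETICAL names): derive per-batch seeds from one master
// seed. Distributing them as an RDD is left to Spark, e.g. via
// spark.sparkContext.parallelize(seeds, numSlices).
object SeedPlan {

  /** Returns `numBatches` distinct seeds; the same master seed always
    * yields the same sequence, which makes the whole run reproducible.
    */
  def batchSeeds(masterSeed: Long, numBatches: Int): Vector[Long] = {
    val rng = new Random(masterSeed) // seeded => deterministic sequence
    Iterator
      .continually(rng.nextLong())
      .distinct // guard against the (unlikely) case of duplicate seeds
      .take(numBatches)
      .toVector
  }
}
```

Keeping only the master seed and the number of batches in the configuration is enough to regenerate every batch later, independently of how Spark schedules the partitions.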

To uniquely identify table rows, we adopted UUIDs, which are widely used in real-world systems. While UUIDs make every record uniquely identifiable, they introduce the challenge of keeping relationships between tables consistent. Without such consistency, foreign keys would not point to existing rows in the referenced tables, undermining the dataset’s usefulness for benchmarking.

To address this, we added a temporary index column during the data generation process. Foreign key UUIDs are therefore not generated independently; instead, they are resolved through the index of the referenced row. This preserves referential integrity across the dataset and keeps it suitable for robust benchmarking.
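The core idea, that a foreign key is derived from the referenced row's index rather than generated on its own, can be sketched with name-based UUIDs. This is an assumption for illustration: the sketch uses `java.util.UUID.nameUUIDFromBytes` (version 3 UUIDs), whereas the article resolves the UUIDs through a join on the index; the object `RowIds` and its methods are hypothetical.

```scala
import java.nio.charset.StandardCharsets
import java.util.UUID

// Minimal sketch (HYPOTHETICAL names): derive a row's UUID deterministically
// from (table, index). ASSUMPTION: name-based version-3 UUIDs; the original
// pipeline looks UUIDs up via a join on the temporary index instead.
object RowIds {

  /** UUID for row `index` of `table`; identical inputs give identical UUIDs. */
  def uuidFor(table: String, index: Long): UUID =
    UUID.nameUUIDFromBytes(s"$table:$index".getBytes(StandardCharsets.UTF_8))

  /** Foreign key pointing at row `refIndex` of `refTable`: computed from the
    * referenced row's index, never drawn independently.
    */
  def foreignKey(refTable: String, refIndex: Long): UUID = uuidFor(refTable, refIndex)
}
```

Because both sides use the same function, an order that stores `customerIndex = 7` always points at the customer whose primary key was derived from index 7, no matter in which batch or partition either row was generated.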

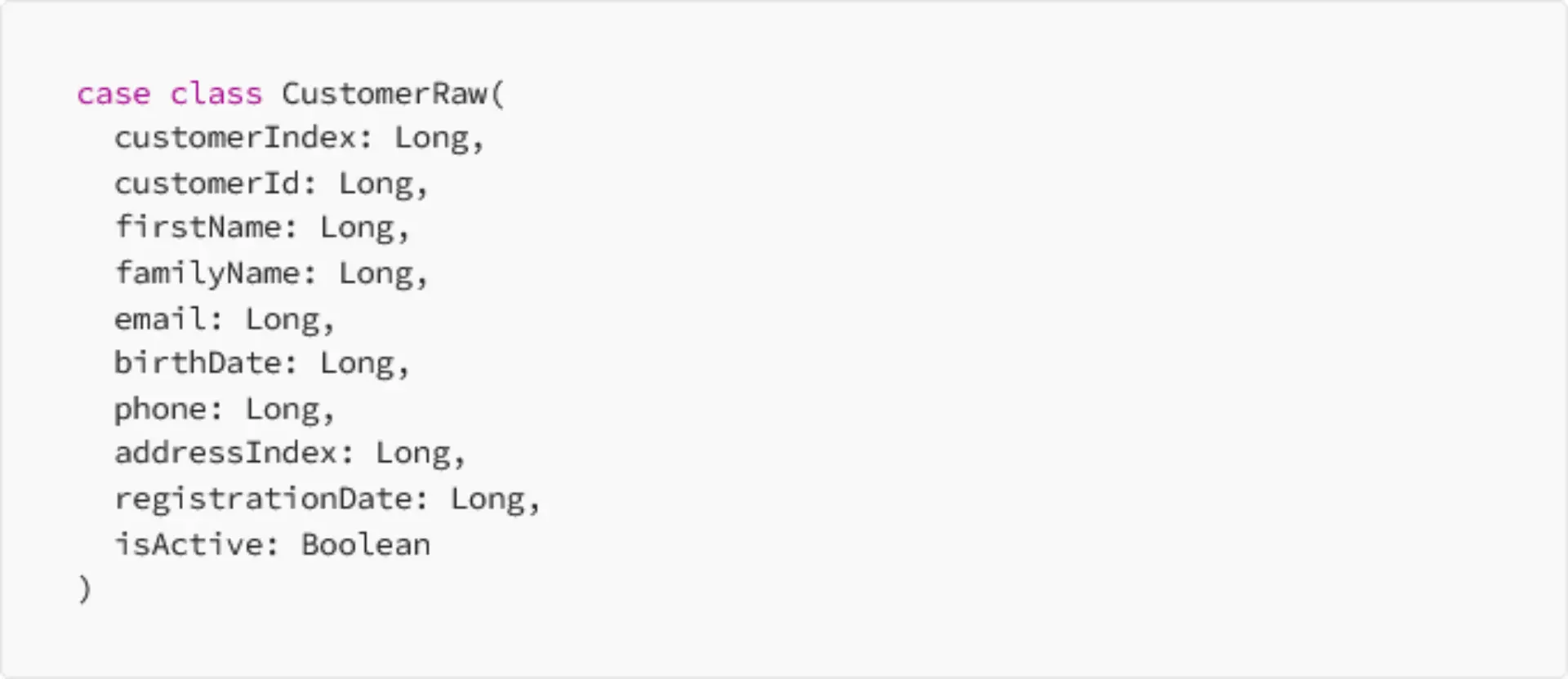

To keep the dataset consistent, we first generated the tables without references and then established relationships by linking foreign keys in subsequent steps. We use the Customers and Orders tables as an example to illustrate the process. We started by defining a class to structure the raw customer data, using Long values because they are cheap to generate as random distributions; the actual column values are created from them in the next step:

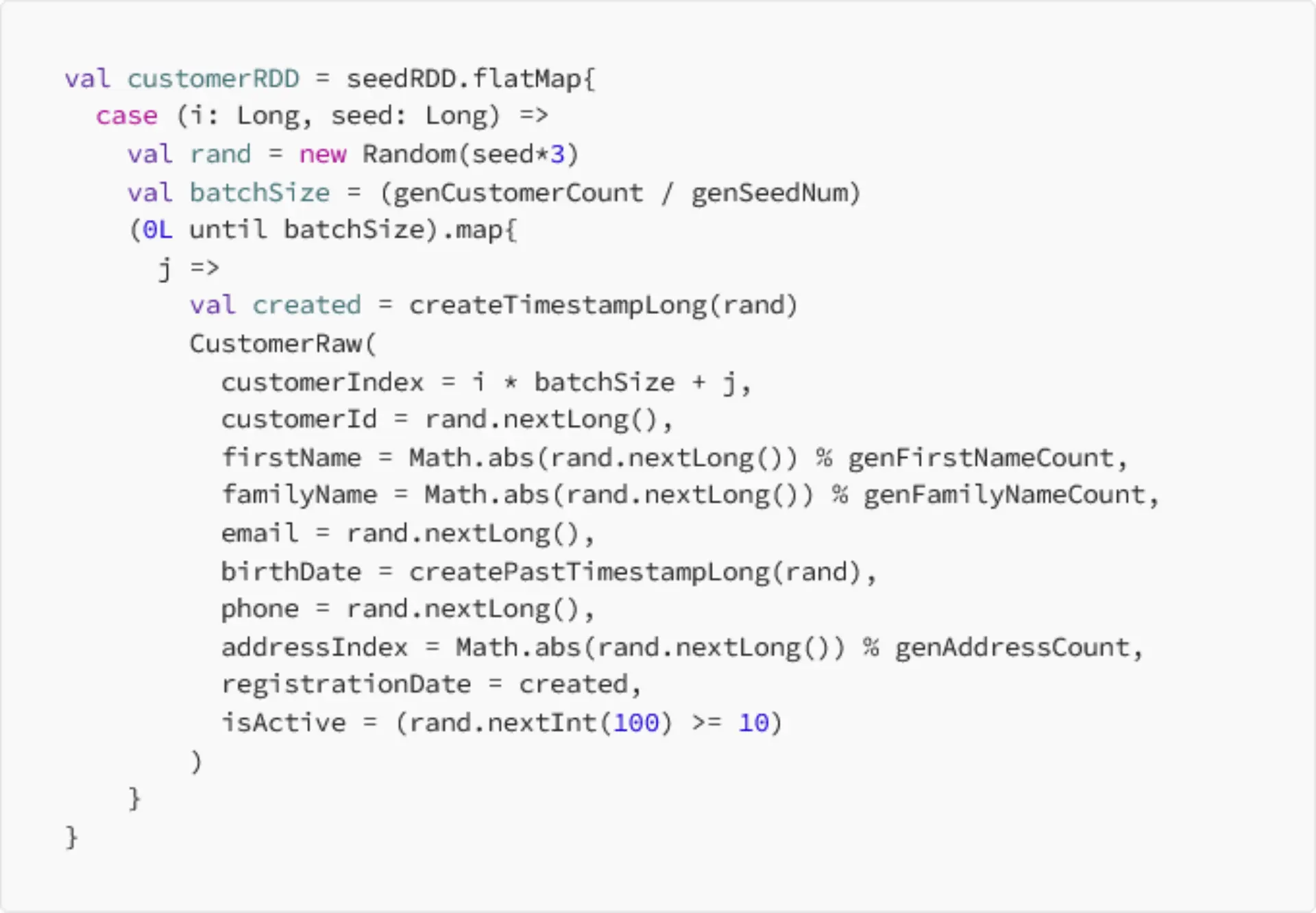

Using the seedRDD, we generated one batch of pseudo-random values per seed, so the number of batches matches the size of the seedRDD. Because every batch is derived from a fixed seed, the generated datasets are reproducible: running the algorithm twice with the same configuration yields the same datasets with the same values.
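The following sketch shows seed-driven batch generation in plain Scala, under stated assumptions: the case class `RawCustomer`, its fields and the object `RawCustomerGen` are hypothetical stand-ins for the class described above. In Spark, `all` would correspond to a transformation over the seedRDD (for example `zipWithIndex` followed by `flatMap`).

```scala
import scala.util.Random

// HYPOTHETICAL raw row: only Long values, turned into real columns later.
final case class RawCustomer(index: Long, nameKey: Long, emailKey: Long, birthKey: Long)

object RawCustomerGen {

  /** Generates one batch from a single seed. `batchNo` offsets the row index
    * so that indices stay unique across batches.
    */
  def batch(seed: Long, batchNo: Int, batchSize: Int): Vector[RawCustomer] = {
    val rng = new Random(seed) // one independent, seeded generator per batch
    Vector.tabulate(batchSize) { i =>
      RawCustomer(
        index = batchNo.toLong * batchSize + i,
        nameKey = rng.nextLong(),
        emailKey = rng.nextLong(),
        birthKey = rng.nextLong()
      )
    }
  }

  /** One batch per seed, mirroring a flatMap over the seedRDD. */
  def all(seeds: Seq[Long], batchSize: Int): Vector[RawCustomer] =
    seeds.zipWithIndex.flatMap { case (seed, batchNo) => batch(seed, batchNo, batchSize) }.toVector
}
```

Since each batch only depends on its own seed and batch number, batches can be generated on any executor in any order and still produce exactly the same rows.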

These numbers were then transformed into a Spark DataFrame and passed through helper functions that turn them into synthetic yet meaningful values, such as syntactically valid email addresses (containing an “@”), formatted birthdates and UUIDs. Lookup tables, for example with names and addresses, made the data more realistic.
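A minimal sketch of such helper functions, assuming small in-memory lookup tables. The name lists, the `example.com` domain and the birthdate range are hypothetical placeholders; in Spark, functions like these could be wrapped with `org.apache.spark.sql.functions.udf` and applied to the raw Long columns.

```scala
import java.time.LocalDate
import java.time.format.DateTimeFormatter

// Minimal sketch: turn raw Long values into readable columns.
// Lookup tables, domain and date range are HYPOTHETICAL placeholders.
object Helpers {
  private val firstNames = Vector("Anna", "Ben", "Clara", "David")
  private val lastNames  = Vector("Meyer", "Schmidt", "Fischer", "Weber")

  /** Picks a lookup entry; floorMod keeps the position non-negative. */
  private def pick(table: Vector[String], key: Long): String =
    table(Math.floorMod(key, table.size.toLong).toInt)

  /** First and last name; dividing the key decorrelates the two picks. */
  def fullName(key: Long): (String, String) =
    (pick(firstNames, key), pick(lastNames, key / firstNames.size))

  /** A syntactically valid address with exactly one "@"; the row index
    * keeps addresses unique even when names repeat.
    */
  def email(nameKey: Long, index: Long): String = {
    val (first, last) = fullName(nameKey)
    s"${first.toLowerCase}.${last.toLowerCase}.$index@example.com"
  }

  private val minBirth = LocalDate.of(1940, 1, 1)
  private val maxBirth = LocalDate.of(2005, 12, 31)
  private val rangeDays = maxBirth.toEpochDay - minBirth.toEpochDay + 1

  /** Maps a raw Long onto a date in [minBirth, maxBirth] as yyyy-MM-dd. */
  def birthdate(key: Long): String =
    minBirth.plusDays(Math.floorMod(key, rangeDays)).format(DateTimeFormatter.ISO_LOCAL_DATE)
}
```

For instance, `Helpers.email(5L, 42L)` picks "Ben" and "Schmidt" from the lookup tables and returns `ben.schmidt.42@example.com`.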

This approach ensures a deterministic and scalable data generation process, allowing us to maintain both uniqueness and reproducibility in artificial datasets.

Managing the relationships between tables

The Orders table was created in a similar way to the Customers table: the seedRDD drives the generation of randomized data in batches, which keeps the process consistent and reproducible. However, an additional step was needed to ensure referential integrity between the Orders and Customers tables. During data generation, a customerIndex was included as a temporary field that identifies the customer record each order belongs to. By joining the Orders table with the Customers table on this index, we retrieved each customer’s UUID and used it to populate the foreign key column in the Orders table. This ensured that all relationships were established correctly, in line with the referential integrity requirements of the data model. We omit the explicit code for brevity; the key takeaway is that interconnected datasets have to be designed deliberately to be useful for benchmarking and testing.
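Since the article omits the code for this step, here is a minimal in-memory sketch of the same idea: raw orders carry only a temporary `customerIndex`, which is then replaced by the customer's UUID through a lookup on that index. All class, field and object names are hypothetical; in Spark the `Map` lookup would be a DataFrame join on the index column.

```scala
import java.util.UUID
import scala.util.Random

// Minimal sketch (HYPOTHETICAL shapes): resolve foreign keys via a temporary
// index. In Spark, the Map lookup below would be a join on the index column.
final case class Customer(index: Long, customerId: UUID)
final case class RawOrder(orderIndex: Long, customerIndex: Long, amountCents: Long)
final case class Order(orderIndex: Long, customerId: UUID, amountCents: Long)

object OrderGen {

  /** Raw orders reference customers only through an index in [0, numCustomers). */
  def rawOrders(seed: Long, numOrders: Int, numCustomers: Int): Vector[RawOrder] = {
    val rng = new Random(seed)
    Vector.tabulate(numOrders) { i =>
      RawOrder(i.toLong, rng.nextInt(numCustomers).toLong, 100L + rng.nextInt(100000))
    }
  }

  /** Replaces the temporary index with the referenced customer's UUID. */
  def resolve(orders: Seq[RawOrder], customers: Seq[Customer]): Vector[Order] = {
    val idByIndex: Map[Long, UUID] = customers.map(c => c.index -> c.customerId).toMap
    orders.toVector.map { o =>
      // Unlike an inner join, which would silently drop the row,
      // fail loudly if the referenced customer does not exist.
      val id = idByIndex.getOrElse(o.customerIndex,
        throw new NoSuchElementException(s"no customer with index ${o.customerIndex}"))
      Order(o.orderIndex, id, o.amountCents)
    }
  }
}
```

Drawing `customerIndex` only from the range of existing customer indices is what guarantees that every lookup succeeds; the exception merely makes a broken configuration visible instead of quietly losing orders.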

After generating the dataset, we wrote the tables out in multiple file formats: CSV, JSON (compressed with gzip) and Parquet (compressed with snappy). This approach:

- Emulates a real-world data platform that stores data in diverse formats

- Enables testing and benchmarking with different compute engines and analytical workflows

- Keeps storage costs low by compressing files, which is beneficial for large-scale data

- Allowed us to create almost 89 GB of compressed data in about 27 minutes (Azure compute: Standard_D8s_v3, West Europe, 6 workers, 10.5 DBU, cost: $0.93)

- Allowed us to create more than 900 GB of compressed data in about 2 hours (Azure compute: Standard_D16s_v3, West Europe, 8 workers, 27 DBU, cost: $9.96)
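A quick back-of-the-envelope comparison of the two runs, taking the reported sizes, durations and costs at face value and treating "about 2 hours" as 120 minutes:

```latex
\begin{aligned}
\text{Run 1:}\quad & \frac{89\ \text{GB}}{27\ \text{min}} \approx 3.3\ \text{GB/min},
  && \frac{\$0.93}{89\ \text{GB}} \approx \$0.010\ \text{per GB} \\
\text{Run 2:}\quad & \frac{900\ \text{GB}}{120\ \text{min}} = 7.5\ \text{GB/min},
  && \frac{\$9.96}{900\ \text{GB}} \approx \$0.011\ \text{per GB}
\end{aligned}
```

Throughput rose by roughly a factor of 2.3 on the larger cluster, while the cost per compressed GB stayed at about one cent, so the extra speed came without a noticeable cost premium.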

What did we achieve?

We demonstrated how Databricks, with its managed Spark environment, provides an ideal platform for generating artificial datasets tailored to real-world benchmarking. By creating synthetic data with realistic distributions and referential integrity, we gained the ability to simulate real-world conditions and thoroughly evaluate processing engines and technologies. Databricks’ scalability and flexibility streamlined the process, allowing us to efficiently produce large-scale, high-quality datasets. These capabilities improved our testing workflows and gave us a solid, reproducible basis for data-driven decisions.